A Moral Compass for Machines

Self-Control for Robots

AI Works Better When It Remembers

We don’t build robots. We make them trustworthy.

Deploy in days, not months. Packs run on any hardware, with no cloud and no re-coding.

What We Build

Self-Control Skill Packs for Robots

Pre-built decision skills that make robots act safely and predictably in real environments.

Deployed in days. Works on humanoids and other robots — any hardware.

Fleet-Wide Learning

One robot learns, the whole fleet improves.

No retraining. No cloud.

Why It Matters

The Problem: Robots follow instructions blindly.

Edge cases break trust. Deployment stalls for months.

With Partenit:

- Decisions checked before execution

- Knowledge transfers across robots

- Fewer failures in edge cases

- Explainable, offline-ready AI

Who This Is For

Humanoid OEMs, robotics platforms, and teams deploying robots in real environments — logistics, manufacturing, healthcare, and beyond.

We’re the self-control layer for robots.

Hardware makers build bodies.

We make robots act responsibly.

Robots Forget. We Give Them Self-Control

From Empty Robot to Skilled Worker in Days

Decisions That Persist. Behavior You Can Trust

How It Works — And Why Robots Need Self-Control, Not Just AI

Robots Without Self-Control: Unsafe by Default

- Execute plans blindly, without consequence checks

- Require constant human supervision

- Fail in edge cases and uncertainty

How Partenit Adds Self-Control

Step 1: Pre-Execution Evaluation

Robots evaluate each proposed action against rules, constraints, and physical limits before anything moves.

Step 2: Uncertainty & Risk Handling

When information is incomplete or risk is too high, robots pause, modify the action, or refuse to proceed — instead of guessing or forcing execution.

Step 3: Predictable Decision Memory

Robots retain decisions, constraints, and outcomes as structured experience — enabling consistent, explainable behavior across tasks and environments.
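The three steps above can be sketched in a few lines of Python. This is an illustrative sketch only, not Partenit's actual API: the names `DecisionGate`, `Action`, and `Verdict`, the forbidden-action list, and the numeric thresholds are all hypothetical and chosen for the example.

```python
from dataclasses import dataclass, field, replace
from enum import Enum


class Verdict(Enum):
    PROCEED = "proceed"
    MODIFY = "modify"
    PAUSE = "pause"
    REFUSE = "refuse"


@dataclass(frozen=True)
class Action:
    name: str
    speed_mps: float    # commanded speed in metres per second
    confidence: float   # perception confidence in [0, 1]


@dataclass(frozen=True)
class Decision:
    action: Action      # the action as approved (possibly modified)
    verdict: Verdict
    reason: str         # human-readable explanation of the verdict


@dataclass
class DecisionGate:
    # All values below are hypothetical, for illustration only.
    forbidden: frozenset = frozenset({"disable_estop"})
    max_speed_mps: float = 1.5
    min_confidence: float = 0.8
    memory: list = field(default_factory=list)

    def evaluate(self, action: Action) -> Decision:
        # Step 1: check the proposed action against rules and limits.
        if action.name in self.forbidden:
            decision = Decision(action, Verdict.REFUSE,
                                f"'{action.name}' violates a rule")
        # Step 2: pause instead of guessing when uncertainty is too high.
        elif action.confidence < self.min_confidence:
            decision = Decision(action, Verdict.PAUSE,
                                "confidence below threshold")
        # Step 2 (continued): modify the action to fit physical limits.
        elif action.speed_mps > self.max_speed_mps:
            safe = replace(action, speed_mps=self.max_speed_mps)
            decision = Decision(safe, Verdict.MODIFY,
                                "speed clamped to limit")
        else:
            decision = Decision(action, Verdict.PROCEED,
                                "all checks passed")
        # Step 3: retain the decision and its reason as structured memory.
        self.memory.append(decision)
        return decision
```

With these assumed limits, `DecisionGate().evaluate(Action("move_pallet", speed_mps=3.0, confidence=0.95))` returns a `MODIFY` verdict with the speed clamped to 1.5, and the decision, together with its reason, is appended to `memory` for later inspection.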

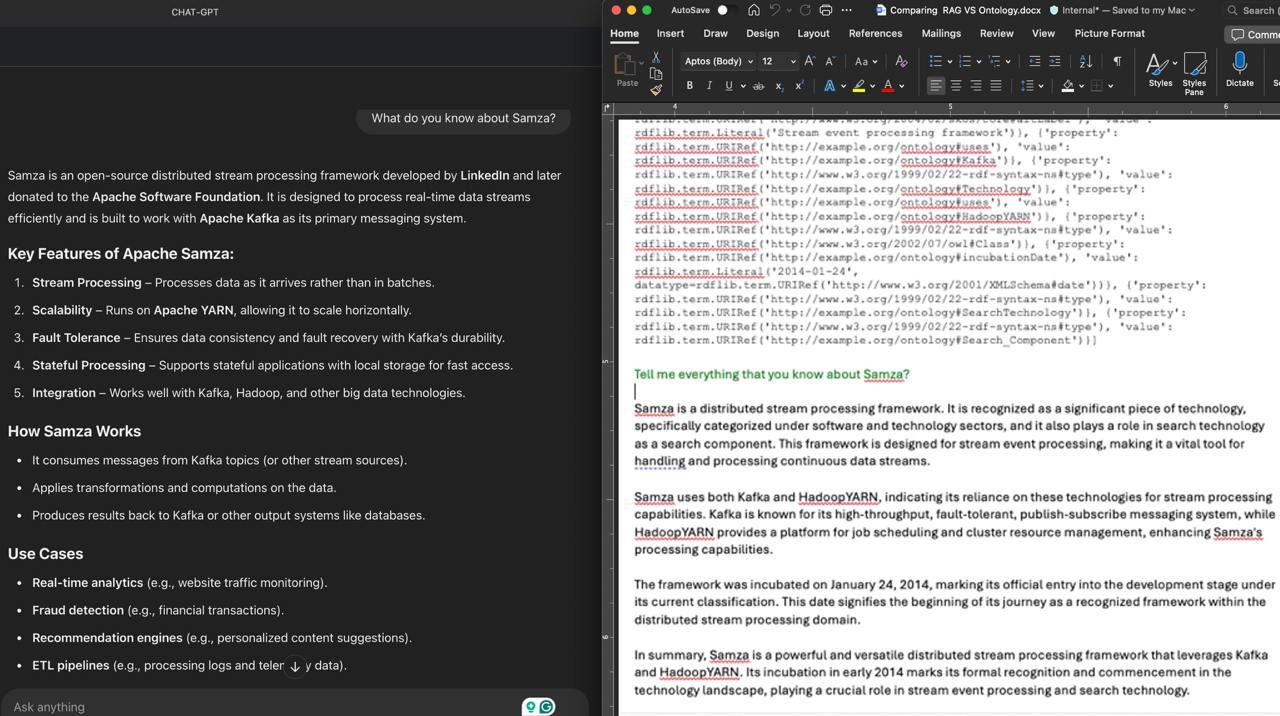

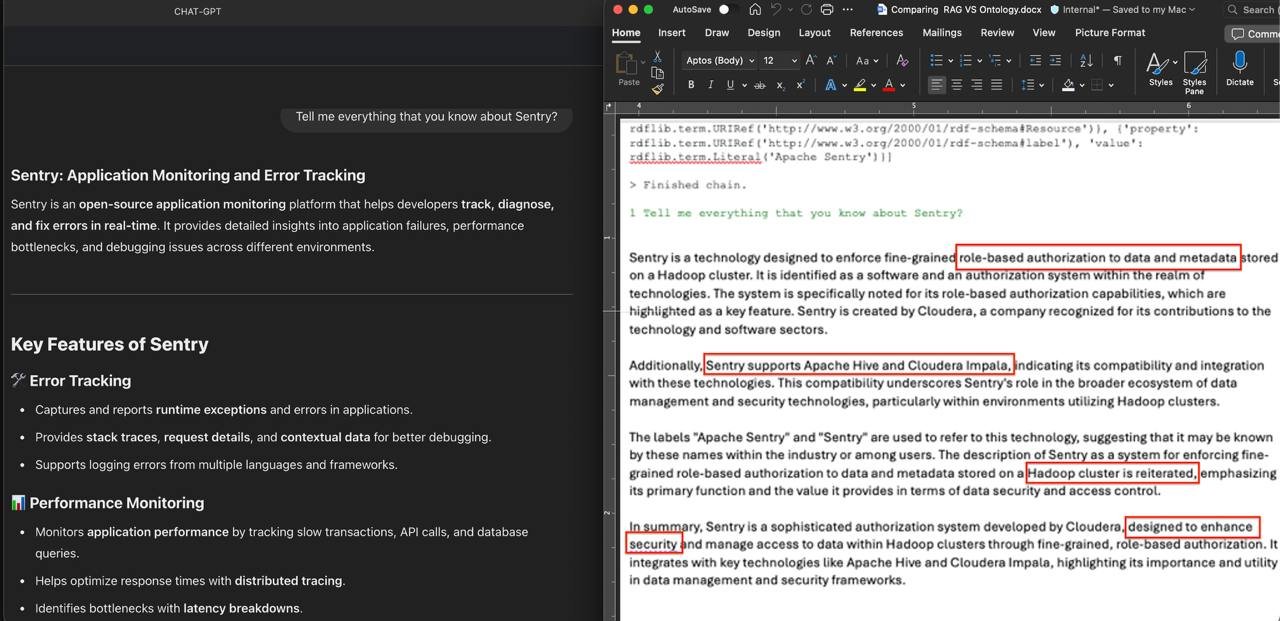

Ontology vs. Large Language Model: How to Get More While Spending Less

Left: Response from a powerful (and expensive) GPT model. Right: Response from a simple ontology query. Same accuracy – dramatically lower cost.

Efficient Performance

Even simple queries to a well-structured ontology can produce results comparable to those of powerful (and costly) large language models — while dramatically reducing compute load and token expenses.

Accuracy and Reliability

Ontology-based retrieval delivers highly precise and consistent results, which is critical in sensitive domains such as healthcare, finance, and legal services where accuracy is non-negotiable.

Transparency and Explainability

Answers derived directly from an ontology are fully transparent and verifiable — unlike probabilistic outputs from large language models. This ensures trust, regulatory compliance, and interpretability.
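To illustrate what a verifiable answer can look like, here is a minimal sketch of an ontology lookup that returns the facts it relied on alongside the answer. The triples, the `lookup` function, and the `is_a` inheritance rule are assumptions made up for this example; they do not describe Partenit's actual ontology format or query engine.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str


# Hypothetical facts, for illustration only.
ONTOLOGY = [
    Triple("pallet_jack", "is_a", "equipment"),
    Triple("equipment", "max_load_kg", "500"),
    Triple("forklift", "is_a", "equipment"),
    Triple("forklift", "max_load_kg", "2000"),
]


def lookup(subject, predicate, triples=ONTOLOGY):
    """Return (value, evidence).

    If the subject has no direct value for the predicate, follow its
    is_a link to a parent concept and try again. The evidence list holds
    every triple used, so the answer can be checked by hand.
    """
    evidence = []
    current = subject
    seen = set()  # guards against cycles in is_a links
    while current not in seen:
        seen.add(current)
        # Direct fact about the current concept?
        for t in triples:
            if t.subject == current and t.predicate == predicate:
                return t.obj, evidence + [t]
        # Otherwise climb one level up the is_a hierarchy.
        parent = next(
            (t for t in triples
             if t.subject == current and t.predicate == "is_a"),
            None,
        )
        if parent is None:
            return None, evidence
        evidence.append(parent)
        current = parent.obj
    return None, evidence
```

In this sketch, `lookup("pallet_jack", "max_load_kg")` returns the value `"500"` together with the two triples that justify it: the `is_a` link to `equipment` and the load limit stored on `equipment`. The same query always yields the same answer and the same evidence trail.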

Ease of Integration

Ontology-based memory can be integrated into existing systems faster and with less complexity than LLM pipelines, making it an ideal choice for organizations seeking rapid deployment without heavy infrastructure costs.

Industries & Use Cases

Warehouse & Logistics

Pre-execution decision control for picking, transport, and charging under real-world constraints.

Healthcare & Assistive Robotics

Robots prioritize human safety, detect uncertainty, and stop instead of guessing in patient environments.

Autonomous Mobile Robots (AMRs)

Decision layer prevents deadlocks, unsafe shortcuts, and mission failure under partial observability.

Humanoid Robots (General Purpose)

Robots evaluate actions before execution, refuse unsafe commands, and behave predictably around humans.

Inspection & Maintenance

Robots decide when not to act in risky or uncertain conditions, preventing irreversible damage.

Extreme & Hazardous Environments

Robots abort missions early when uncertainty exceeds safe thresholds — protecting hardware and surroundings.

Manufacturing & Assembly

Robots pause, adapt, or abort actions when tolerances, tools, or conditions change unexpectedly.

Construction & Heavy Environments

Robots assess stability, load, and risk before movement — not after a failure.

Research & Robotics R&D

A controllable decision layer for testing, validating, and explaining robot behavior before real deployment.

Where AI Memory Makes the Difference

From warehouses to healthcare, robots need more than algorithms: they need professional skills and memory. Partenit: DeepContext AI turns fragmented actions into long-term knowledge, so robots can work with context, precision, and adaptability.

NeoIntelligent Robotics: Memory as Evolution

Robots transcend programming. Our ontological memory transforms machines from rigid executors into adaptive, learning entities that accumulate experience like living organisms. Each interaction becomes a neural pathway, creating machines that understand context, not just commands.

Professional Knowledge Amplification

Imagine expertise that never forgets. Doctors, lawyers, and engineers gain an intelligent archive that doesn’t just store information, but actively interprets, connects, and surfaces insights across massive knowledge landscapes in milliseconds.

Corporate Intelligence Networks

Knowledge transforms from static data pools into dynamic, interconnected ecosystems. Our multi-layered ontological memory turns complex information into living, breathing intelligence, where insights emerge organically, not through mechanical querying.

Autonomous Learning Ecosystems

We don’t just help machines remember – we teach them to think. Partenit memory enables systems to recognize patterns, predict challenges, and autonomously adapt their behavior, creating a new paradigm of machine consciousness.